|

I'm a final-year PhD student in the joint program between Shanghai Jiao Tong University and Eastern Institute of Technology, Ningbo, supervised by Wenjun Zeng and Xiaokang Yang. I also work closely with Xin Jin and Yichao Yan. Prior to that, I received my B.S. in Computer Science from Nanjing University in 2018 and my M.S. in Computer Science from Shanghai Jiao Tong University in 2021, supervised by Cewu Lu. I was also fortunate to work with Wenjun Zeng and Cuiling Lan as a research intern in the Intelligent Multimedia Group at Microsoft Research Asia. Email / Google Scholar / GitHub / Twitter / 知乎 |

|

|

I have broad research interests in human-centric vision, including human motion modeling, human-centric interactions, and embodied intelligence. |

|

* denotes equal contribution |

|

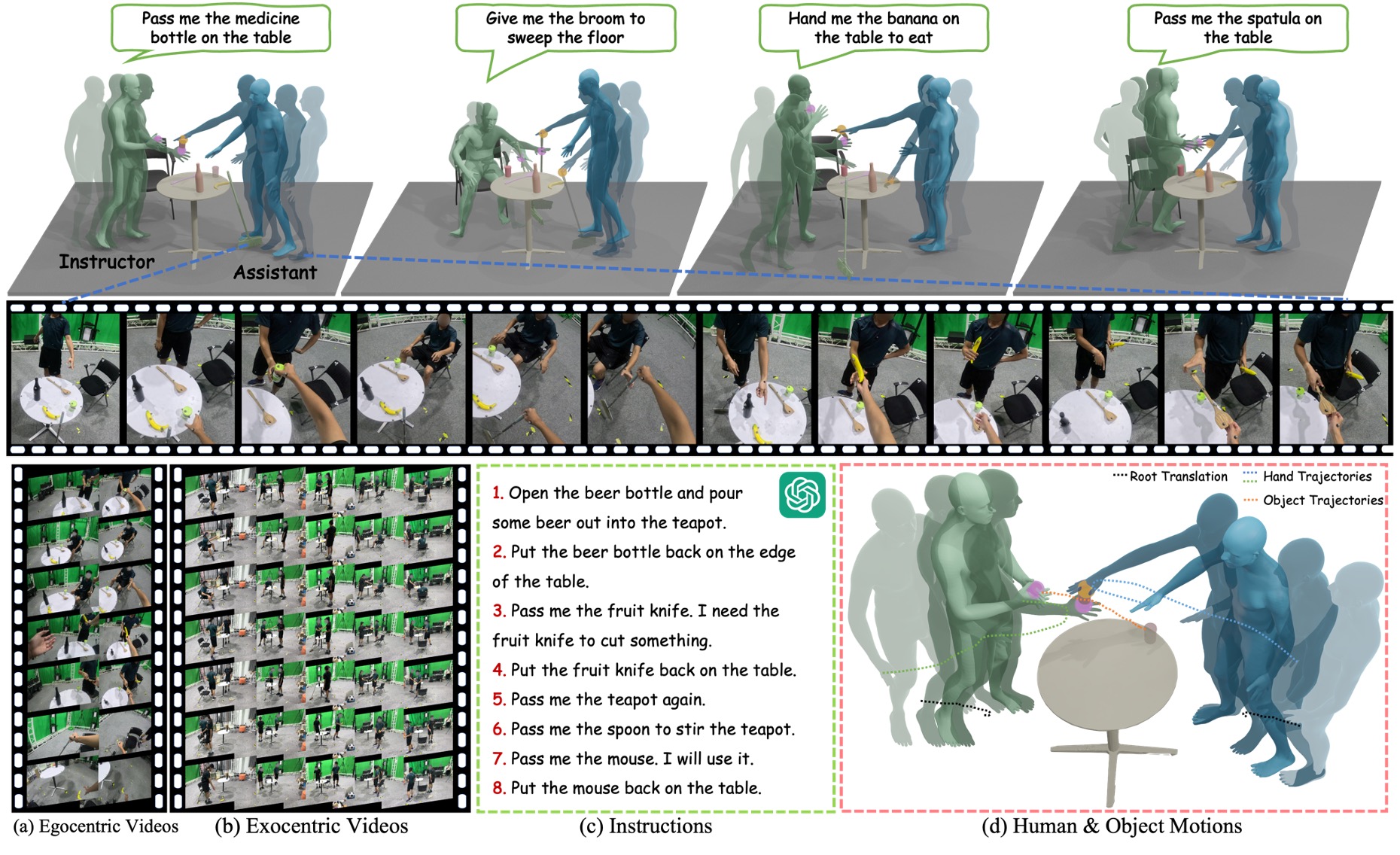

Liang Xu, Chengqun Yang, Zili Lin, Fei Xu, Yifan Liu, Congsheng Xu, Yiyi Zhang, Jie Qin, Xingdong Sheng, Yunhui Liu, Xin Jin, Yichao Yan, Wenjun Zeng, Xiaokang Yang ICCV, 2025 [Paper] [Project] [Code (Coming Soon)] We collect InterVLA, the first large-scale human-object-human interaction dataset, with diverse generalist interaction categories and egocentric perspectives. |

|

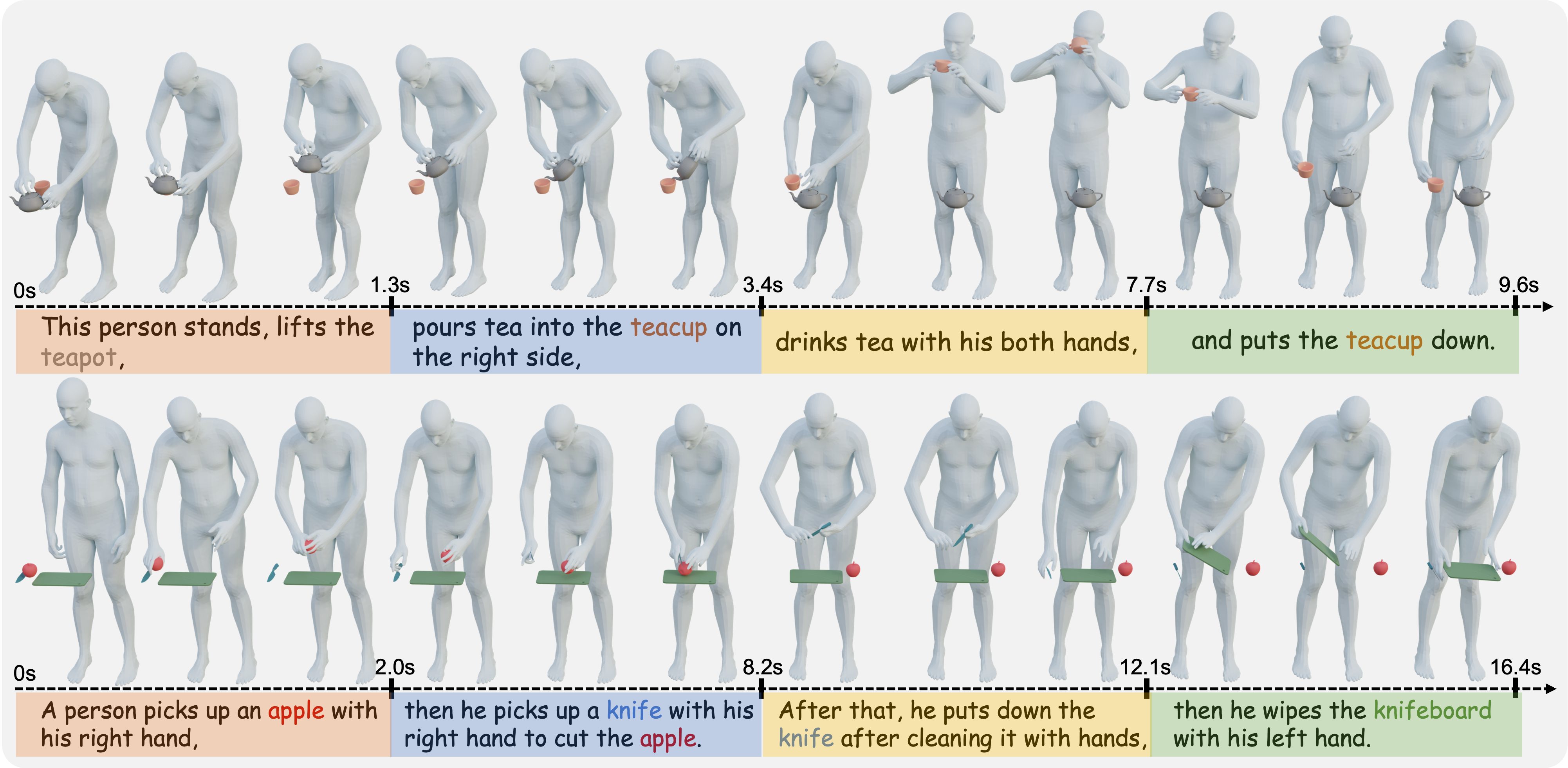

Xintao Lv*, Liang Xu*, Yichao Yan, Xin Jin, Congsheng Xu, Shuwen Wu, Yifan Liu, Lincheng Li, Mengxiao Bi, Wenjun Zeng, Xiaokang Yang ECCV, 2024 [Paper] [Project] [Code] We build a large-scale dataset of humans interacting with multiple objects, comprising 3.3K HOI sequences and 4.08M frames. |

|

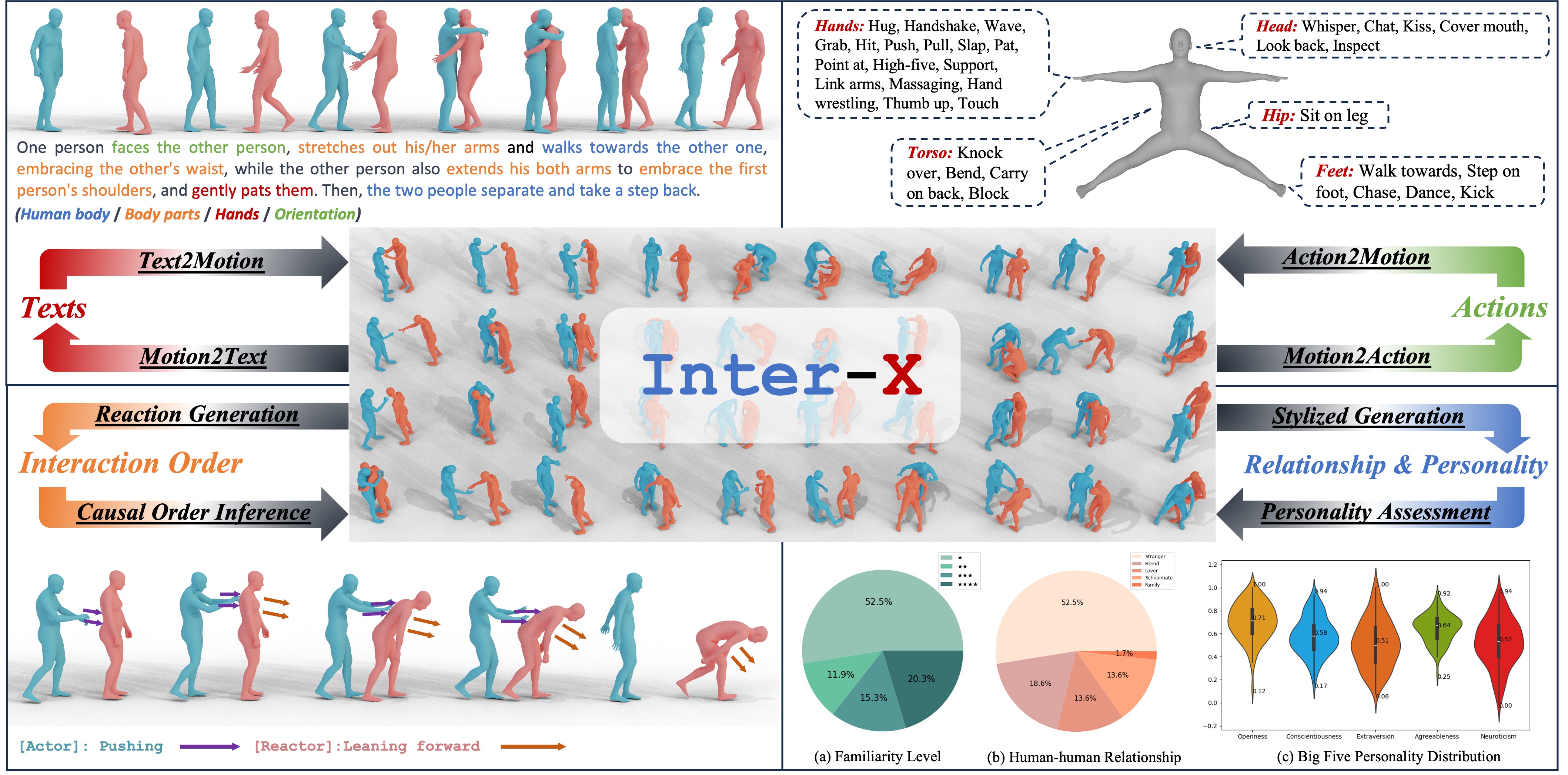

Liang Xu, Xintao Lv, Yichao Yan, Xin Jin, Shuwen Wu, Congsheng Xu, Yifan Liu, Yizhou Zhou, Fengyun Rao, Xingdong Sheng, Yunhui Liu, Wenjun Zeng, Xiaokang Yang CVPR, 2024 [Paper] [Project] [Code] We propose Inter-X, a large-scale dataset of human-human interactions with 11K interaction sequences and more than 8.1M frames. |

|

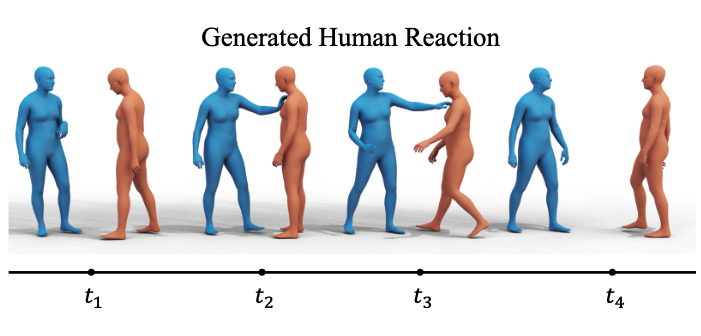

Liang Xu, Yizhou Zhou, Yichao Yan, Xin Jin, Wenhan Zhu, Fengyun Rao, Xiaokang Yang, Wenjun Zeng CVPR, 2024 [Paper] [Project] [Code] We propose the first multi-setting human action-reaction synthesis benchmark to generate human reactions conditioned on given human actions. |

|

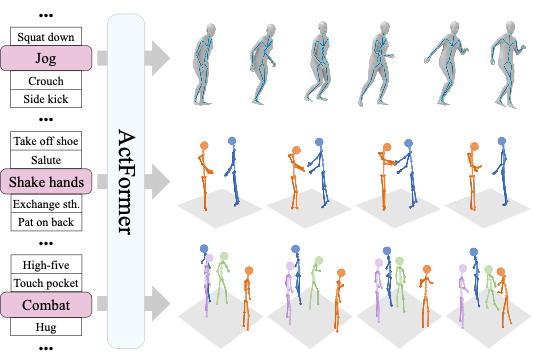

Liang Xu*, Ziyang Song*, Dongliang Wang, Jing Su, Zhicheng Fang, Chenjing Ding, Weihao Gan, Yichao Yan, Xin Jin, Xiaokang Yang, Wenjun Zeng, Wei Wu ICCV, 2023 [Paper] [Project] [Code] We present a GAN-based Transformer for general action-conditioned 3D human motion generation, including single-person actions and multi-person interactive actions. |

|

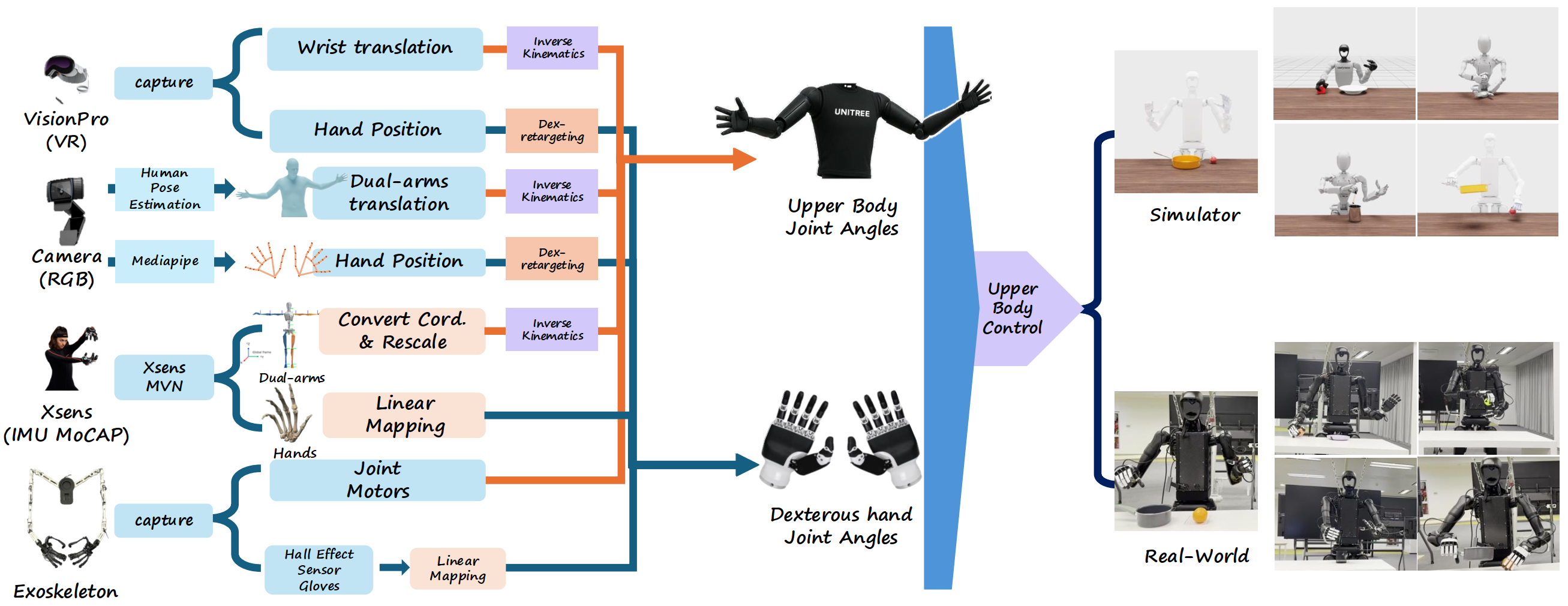

Hangyu Li*, Qin Zhao*, Haoran Xu, Xinyu Jiang, Qingwei Ben, Feiyu Jia, Haoyu Zhao, Liang Xu, Jia Zeng, Hanqing Wang, Bo Dai, Junting Dong, Jiangmiao Pang arXiv, 2025 [Paper] [Project] [Code] We introduce TeleOpBench for benchmarking dual-arm dexterous teleoperation, which integrates motion-capture, VR controllers, upper-body exoskeletons, and vision-only teleoperation pipelines within a single modular framework. |

|

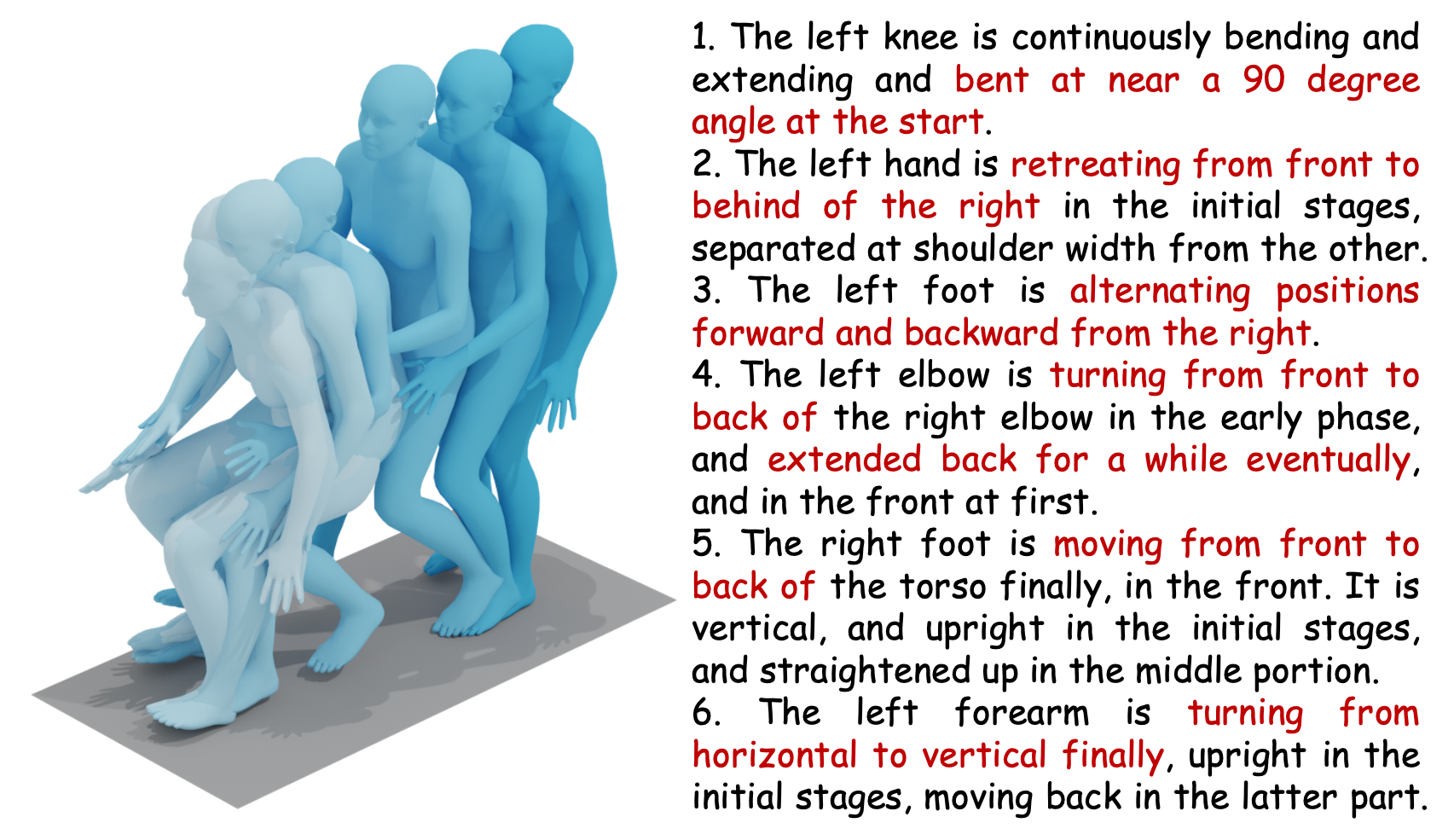

Liang Xu, Shaoyang Hua, Zili Lin, Yifan Liu, Feipeng Ma, Yichao Yan, Xin Jin, Xiaokang Yang, Wenjun Zeng arXiv, 2024 [Paper] [Code] We tackle the problem of how to build and benchmark a large motion model with video action datasets and disentangled rule-based annotations. |

|

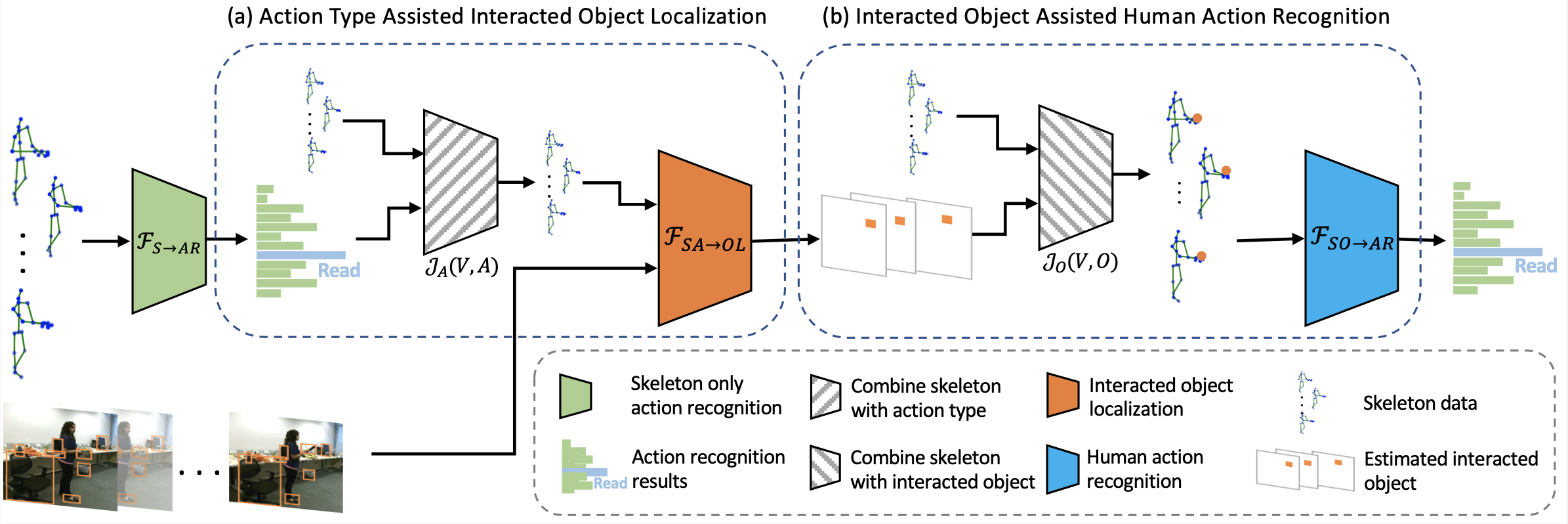

Liang Xu, Cuiling Lan, Wenjun Zeng, Cewu Lu TMM, 2022 [Paper] We propose a joint learning framework for mutually assisted interacted object localization and human action recognition based on skeleton data. |

|

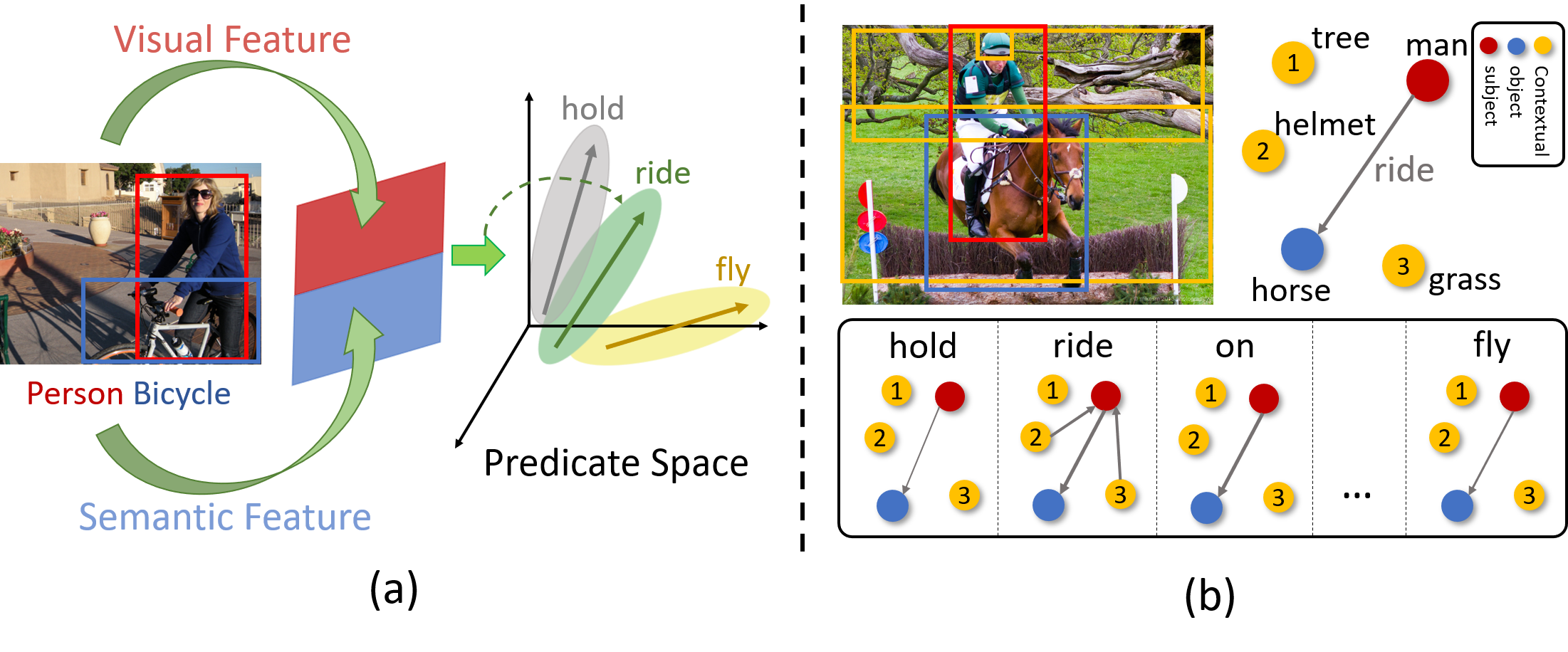

Liang Xu, Yong-Lu Li, Mingyang Chen, Yan Hao, Cewu Lu ICME, 2021 (Oral Presentation) We propose a novel and concise perspective called "predicate-aware learning network (PAL-Net)" for visual relationship recognition. |

|

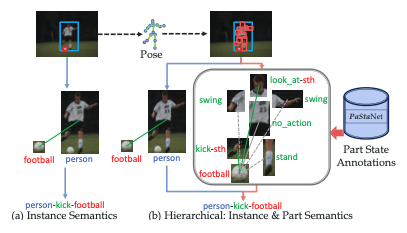

Yong-Lu Li, Liang Xu, Xinpeng Liu, Xijie Huang, Yue Xu, Shiyi Wang, Hao-Shu Fang, Ze Ma, Mingyang Chen, Cewu Lu CVPR, 2020; TPAMI, 2023 [Paper] [Code] [Project] We build PaStaNet, a large-scale knowledge base that contains 7M+ PaSta annotations. We first infer PaStas and then reason about the activities based on these part-level semantics. |

|

Yong-Lu Li, Siyuan Zhou, Xijie Huang, Liang Xu, Ze Ma, Hao-Shu Fang, Yan-Feng Wang, Cewu Lu CVPR, 2019; TPAMI, 2022 [Paper] [Code] We explore interactiveness knowledge, which indicates whether a human and an object interact with each other, for Human-Object Interaction (HOI) detection. |

|

Shanghai Jiao Tong University & Eastern Institute of Technology, Ningbo, Shanghai/Ningbo, China

Shanghai Artificial Intelligence Laboratory, Shanghai, China

WeChat, Tencent Inc., Beijing, China

SenseTime Technology Development Co., Ltd., Shanghai, China

Microsoft Research Asia, Beijing, China

Shanghai Jiao Tong University, Shanghai, China

Nanjing University, Nanjing, China

|

|

Reviewer: CVPR, ICCV, ECCV, NeurIPS, ICLR, WACV, ACM Multimedia, TMM, IJCV |

|

|