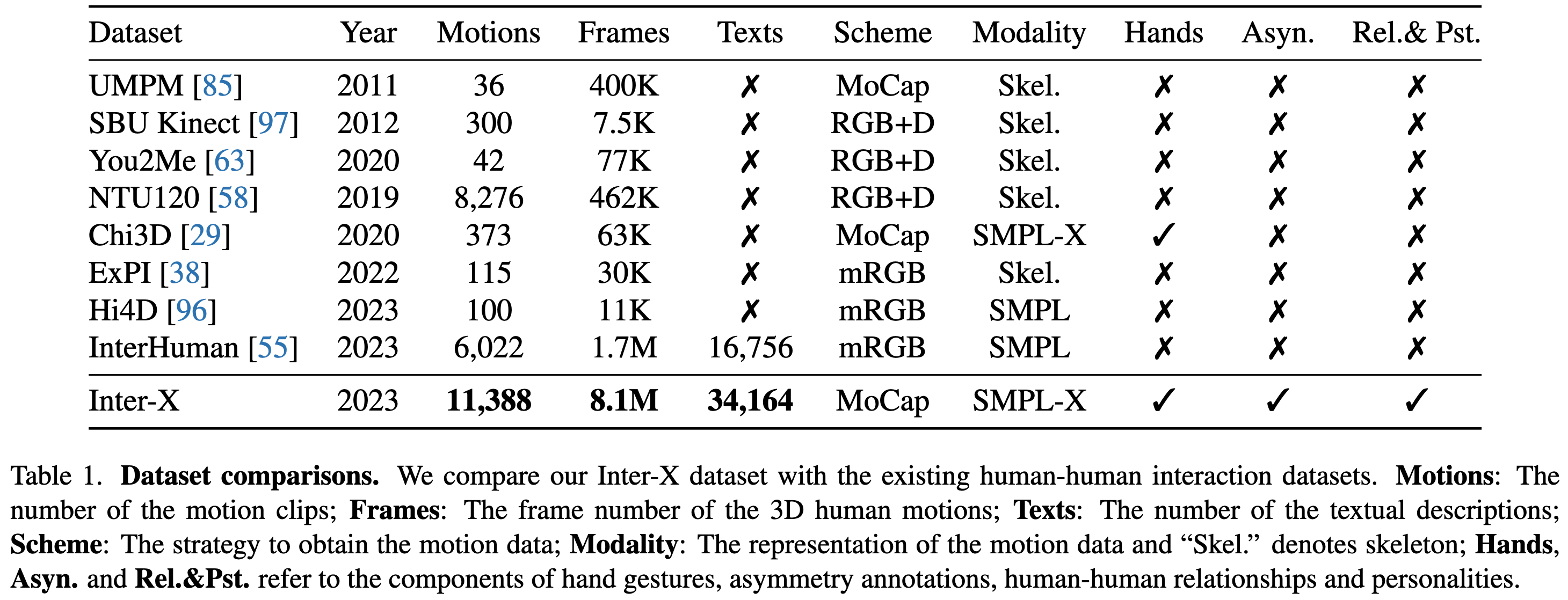

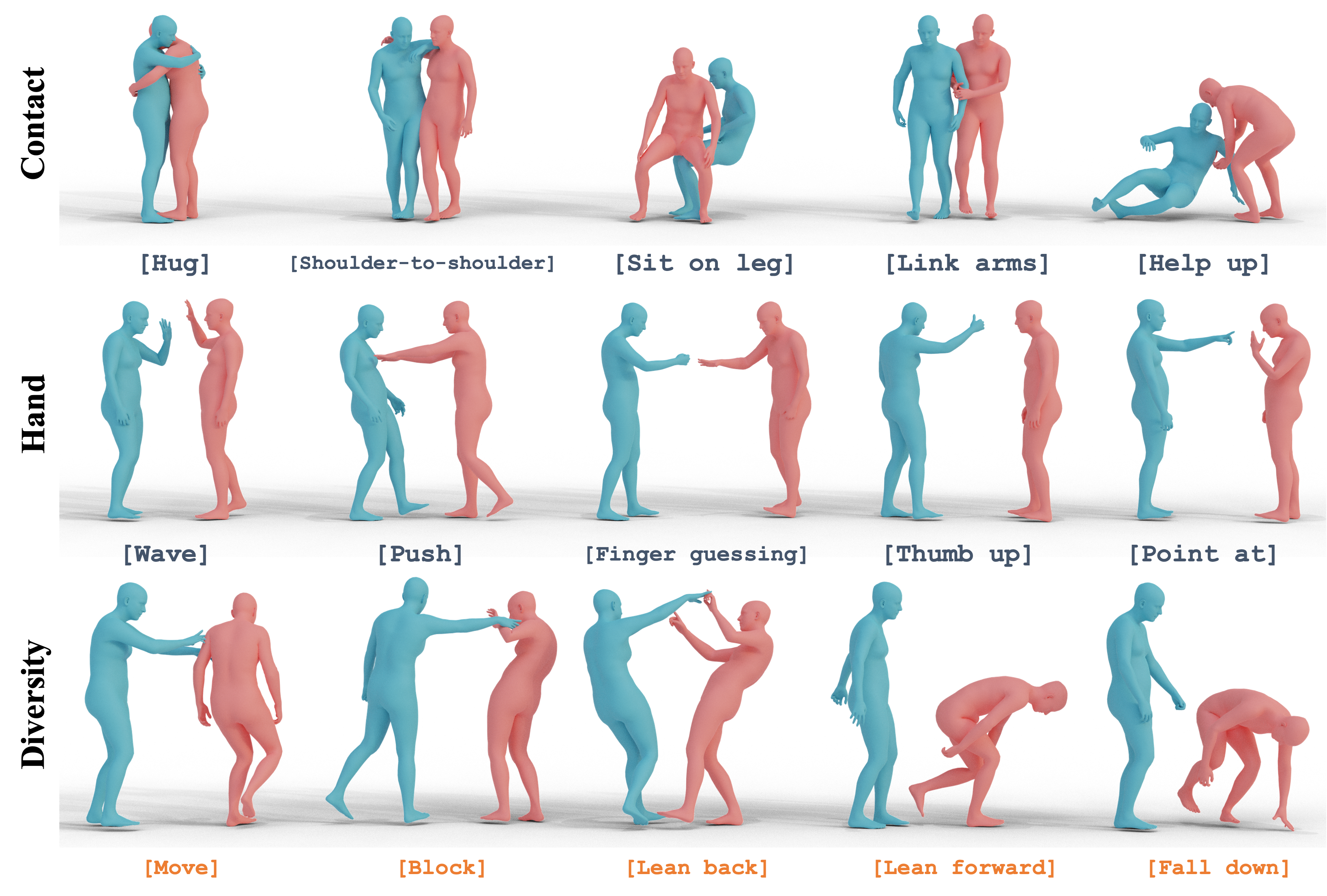

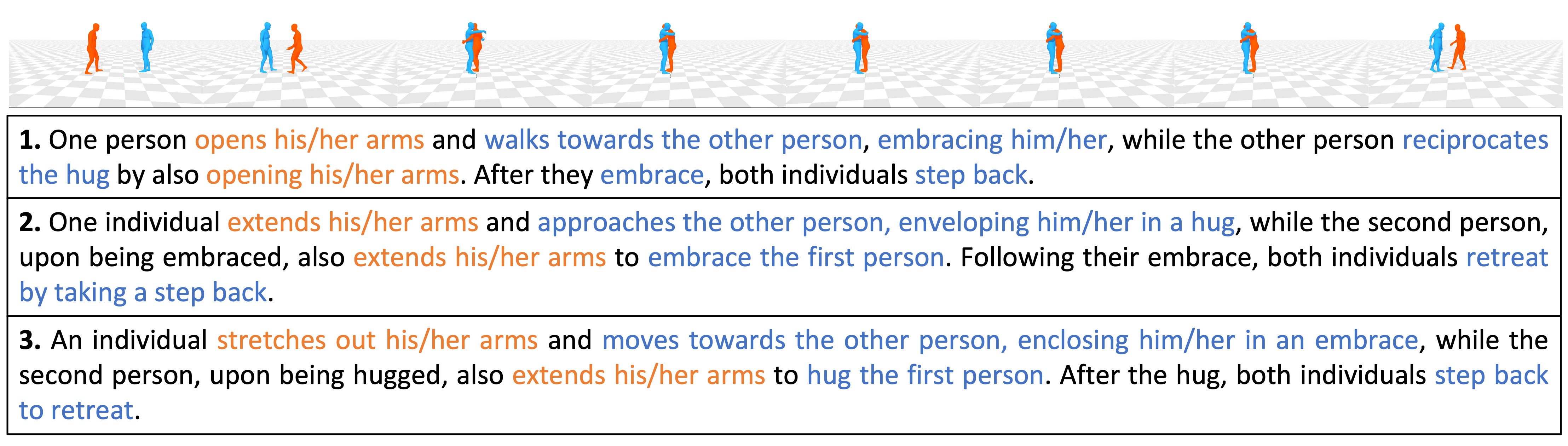

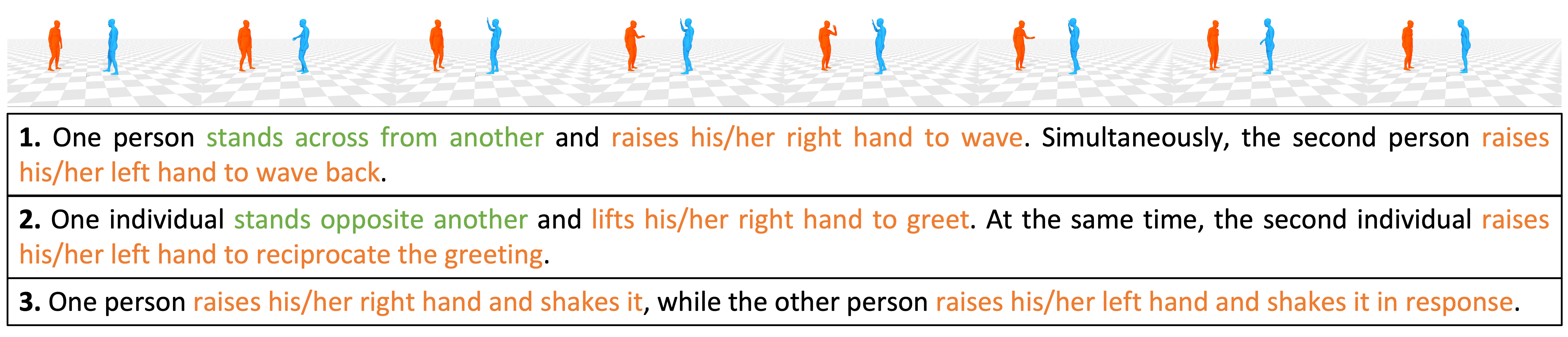

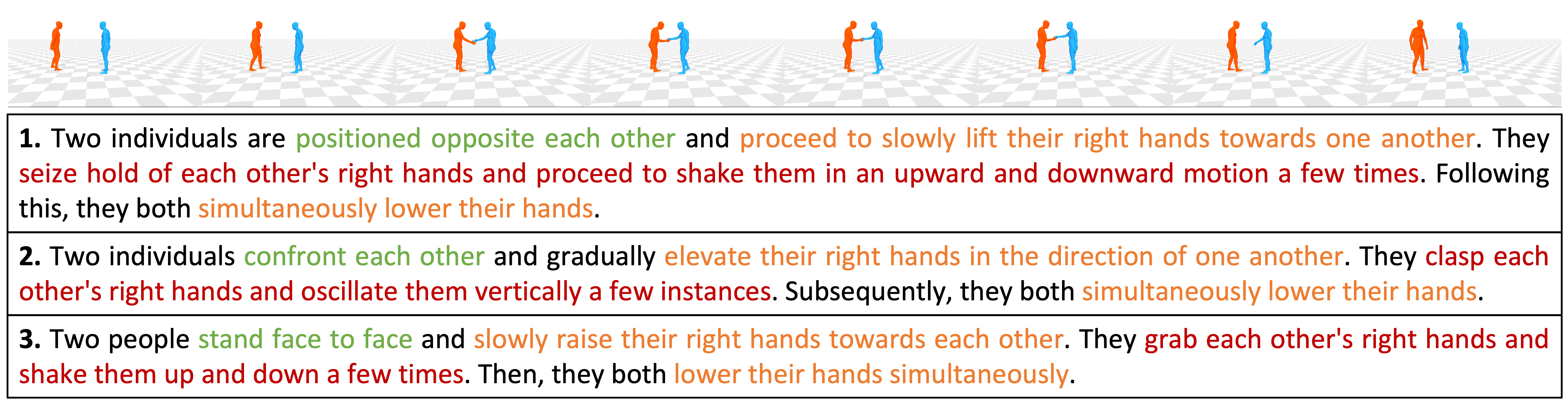

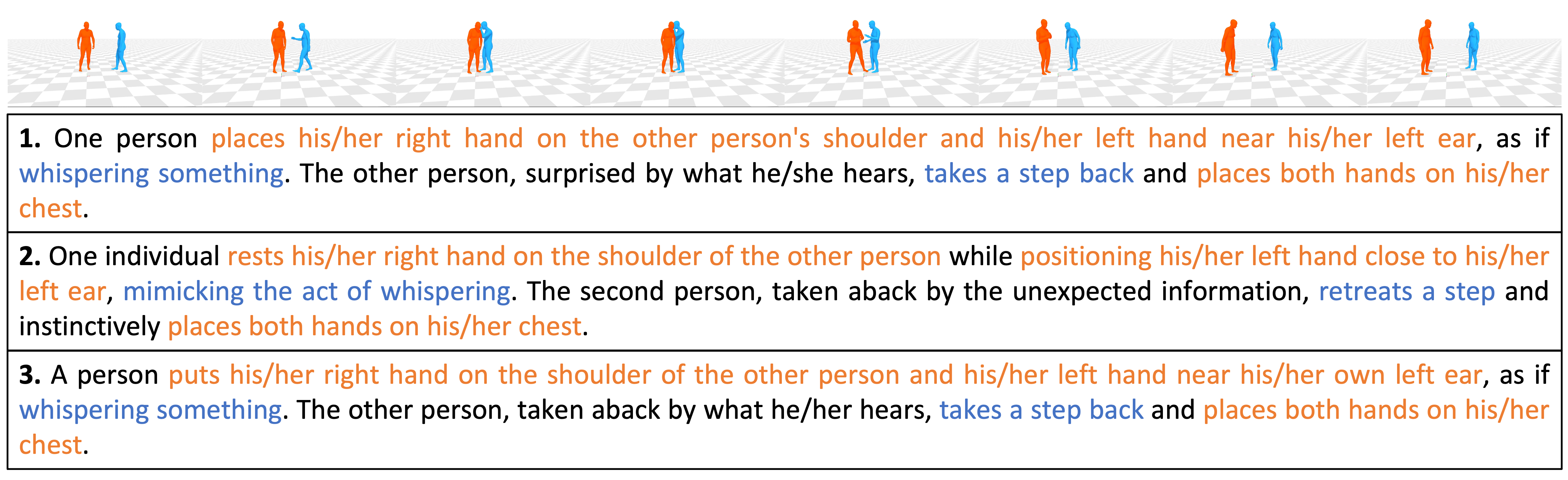

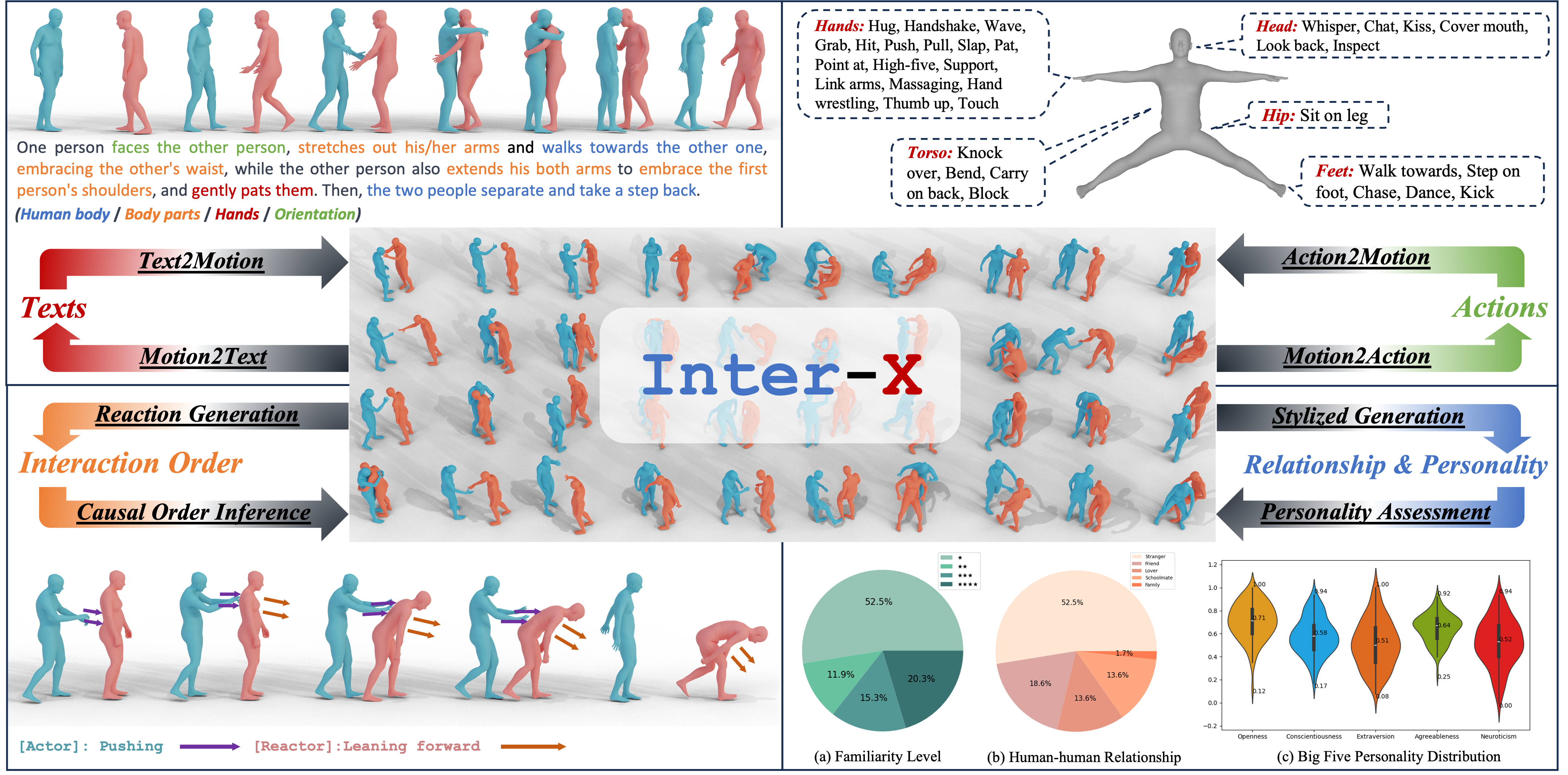

Figure 1. Inter-X is a large-scale human-human interaction MoCap dataset with ~11K interaction sequences and more than 8.1M frames. Its fine-grained textual descriptions, semantic action categories, interaction-order labels, and relationship and personality annotations support four categories of downstream tasks.